Deep-Sixed AI Dreams

Let’s play Never Have I Ever.

I’ll go first.

Never have I ever seen a market narrative crushed so thoroughly over a weekend.

Until Friday afternoon last week, the foregone conclusion was that AI models required Big Tech bucks to train.

Getting Google’s Gemini production-ready cost $191 million. The cost to train Meta’s latest large language model (LLM), Llama 3.1, released last July, soared into the hundreds of millions of dollars. OpenAI’s GPT-4 racked up similar costs, with its next hoped-for model, GPT-5, reportedly exceeding $500 million, and it’s still not ready.

Only dominant technology companies flush with cash and massive profit margins could afford to swim in such spendy waters.

But, just like that, DeepSeek’s release reminded the world never to ignore the small fish because they often figure out how to beat the big fish at their own game.

And while DeepSeek crushed the high-cost-to-train narrative over the weekend, the energy required for it to solve problems reinforced another.

Which means it’s still game on for this market theme.

The Power to Reason

Large language models (LLMs) like ChatGPT use billions of text-based parameters to build a vocabulary, which requires training.

After spending hundreds of millions of dollars on training—one big power bill—the LLM goes into production and uses that vocabulary to arrive at an answer. Its thinking process involves inferring the “best fit” answer by interpolating its vast vocabulary.

When asked a question, the response should be qualified with “Based on what I’ve read, this sounds right.”

Building that vocabulary requires a lot of energy. But with a vocabulary in place, arriving at an answer takes comparatively less (though still 10X the energy of an old-school Google search).

The implied trade-off has been that LLMs have high up-front energy costs to train offset by lower ongoing energy costs to answer.

And the team behind DeepSeek blew up that assumption by shifting the burden of work further back.

I Can Infer Too

We don’t know much about DeepSeek, but here’s what I have inferred. We’ll find out soon enough whether its model performs as claimed.

Training DeepSeek relies on built-in rules to find the answer.

Those rules help it to “reason” iteratively rather than interpolate from a fixed vocabulary. In a sense, it builds the vocabulary it needs on the fly. And building that vocabulary requires a lot more energy than interpolating from a vocabulary already built.

They flipped the up-front versus ongoing cost relationship on its head.

In fact, I don’t think you can technically consider DeepSeek an LLM because it doesn’t rely on an extensive vocabulary. And you need a large vocabulary to qualify for the large language label.

However you want to label it, DeepSeek’s shifting of the burden to the back end throws the “Massive CapEx Spending to Train AI” narrative out the window while refocusing the whole AI story on the “My God, We Need Tremendous Power to Run these Things” theme.

And that has two impacts.

Impact #1: Eliminating the need for massive upfront CapEx means almost anyone can play the “Build Your Own AI” game. AI table stakes went from Big Fish money to early-stage start-up money. Microsoft, Google, and Meta now have to swim alongside many small fish that are equally competitive now that they can afford to play.

More competition means profit compression for the Big Tech companies that, until this past weekend, had the market cornered.

Impact #2: The more DeepSeek’s approach to AI model building catches on, the more power we need to support AI. It requires more power because it performs more computations.

More computations mean 1) more chips, so the NVIDIA theme still holds, and 2) more power, which means demand for oil and gas – heck, the entire energy sector – goes higher.

The AI game is still on, but being a Big Fish no longer creates an advantage.

Now back to our game. What’s your Never Have I Ever?

Think Free. Be Free.

Don Yocham, CFA

Managing Editor of The Capital List

Deep-Sixed AI Dreams

By Don Yocham

Posted: January 28, 2025

From FUD to Oil and Gas Clarity

By Don Yocham

Posted: January 25, 2025

Waking Up to the “Drill Baby, Drill” Dream

By Don Yocham

Posted: January 22, 2025

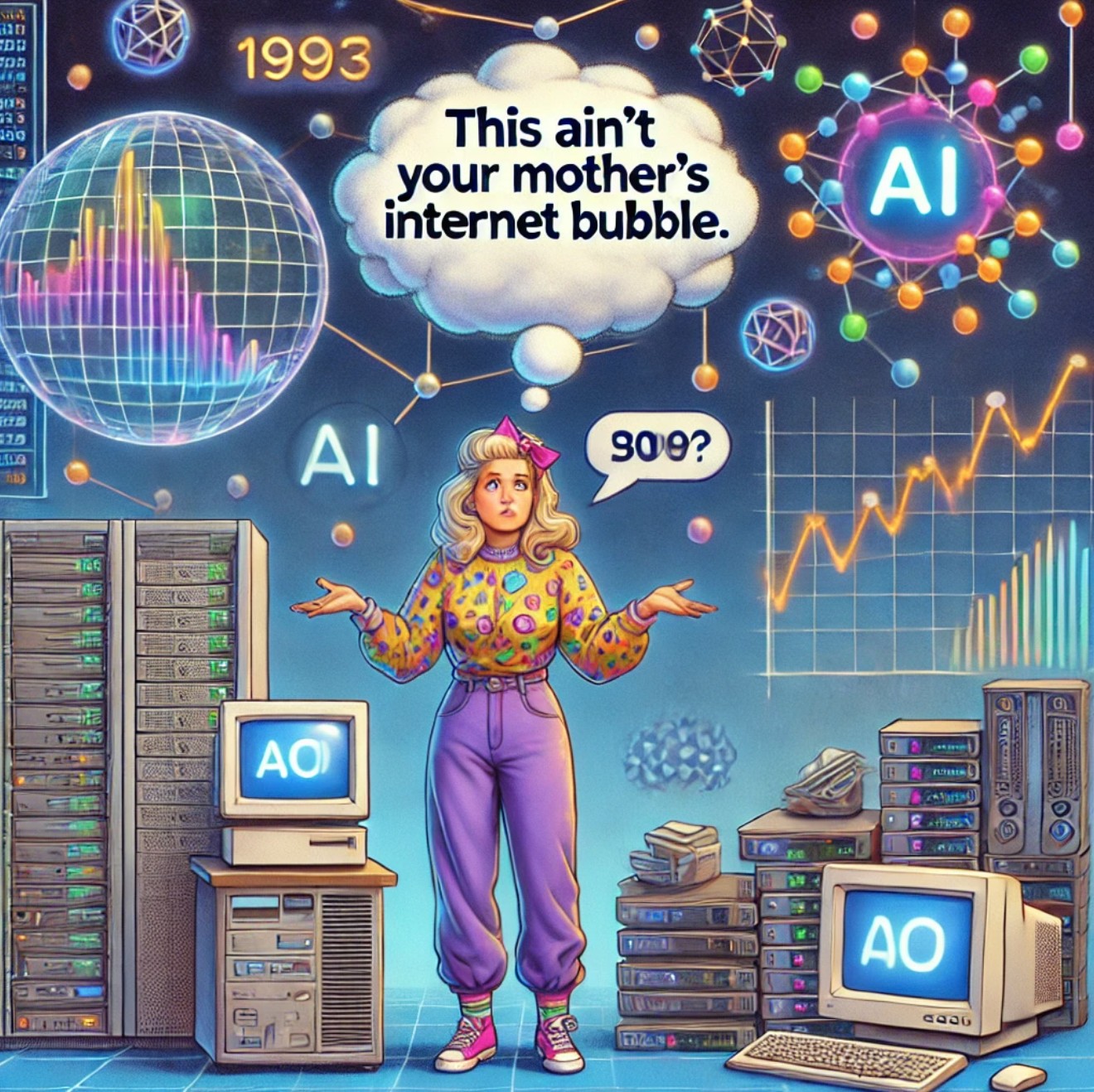

This Ain’t Your Mother’s Internet Bubble

By Don Yocham

Posted: January 21, 2025

Tariff Winners and Losers

By Don Yocham

Posted: January 17, 2025

The Odds Favor Growth

By Don Yocham

Posted: January 14, 2025

FREE Newsletters:

"*" indicates required fields